You are amazing

+
+ Jade/Pug is a terse and simple
+ templating language with
+ a focus on performance
+ and powerful features.
+

+Hello `
+	hello__1 = `!

`
+)
+func Jade_hello(word string, wr io.Writer) {
+	buffer := &WriterAsBuffer{wr}
+	buffer.WriteString(hello__0)
+	WriteEscString(word, buffer)
+	buffer.WriteString(hello__1)
+}
+```
+
+`main.go`
+```go
+package main
+//go:generate jade -pkg=main -writer hello.jade
+
+import "net/http"
+
+func main() {
+	http.HandleFunc("/", func(wr http.ResponseWriter, req *http.Request) {
+		Jade_hello("jade", wr)
+	})
+	http.ListenAndServe(":8080", nil)
+}
+```
+
+output at localhost:8080
+```html
+Hello jade!
+```
+
+### github.com/Joker/jade package
+generate [`html/template`](https://pkg.go.dev/html/template#hdr-Introduction) at runtime
+(This case is slightly slower and doesn't support[^2] all features of Jade.go)
+
+```go
+package main
+
+import (
+	"fmt"
+	"html/template"
+	"net/http"
+
+	"github.com/Joker/hpp" // Prettify HTML
+	"github.com/Joker/jade"
+)
+
+func handler(w http.ResponseWriter, r *http.Request) {
+	jadeTpl, _ := jade.Parse("jade", []byte("doctype 5\n html: body: p Hello #{.Word} !"))
+	goTpl, _ := template.New("html").Parse(jadeTpl)
+
+	fmt.Printf("output:%s\n\n", hpp.PrPrint(jadeTpl))
+	goTpl.Execute(w, struct{ Word string }{"jade"})
+}
+
+func main() {
+	http.HandleFunc("/", handler)
+	http.ListenAndServe(":8080", nil)
+}
+```
+
+console output
+```html
+
+
+
+Hello {{.Word}} !
+
+
+```
+
+output at localhost:8080
+```html
+Hello jade !
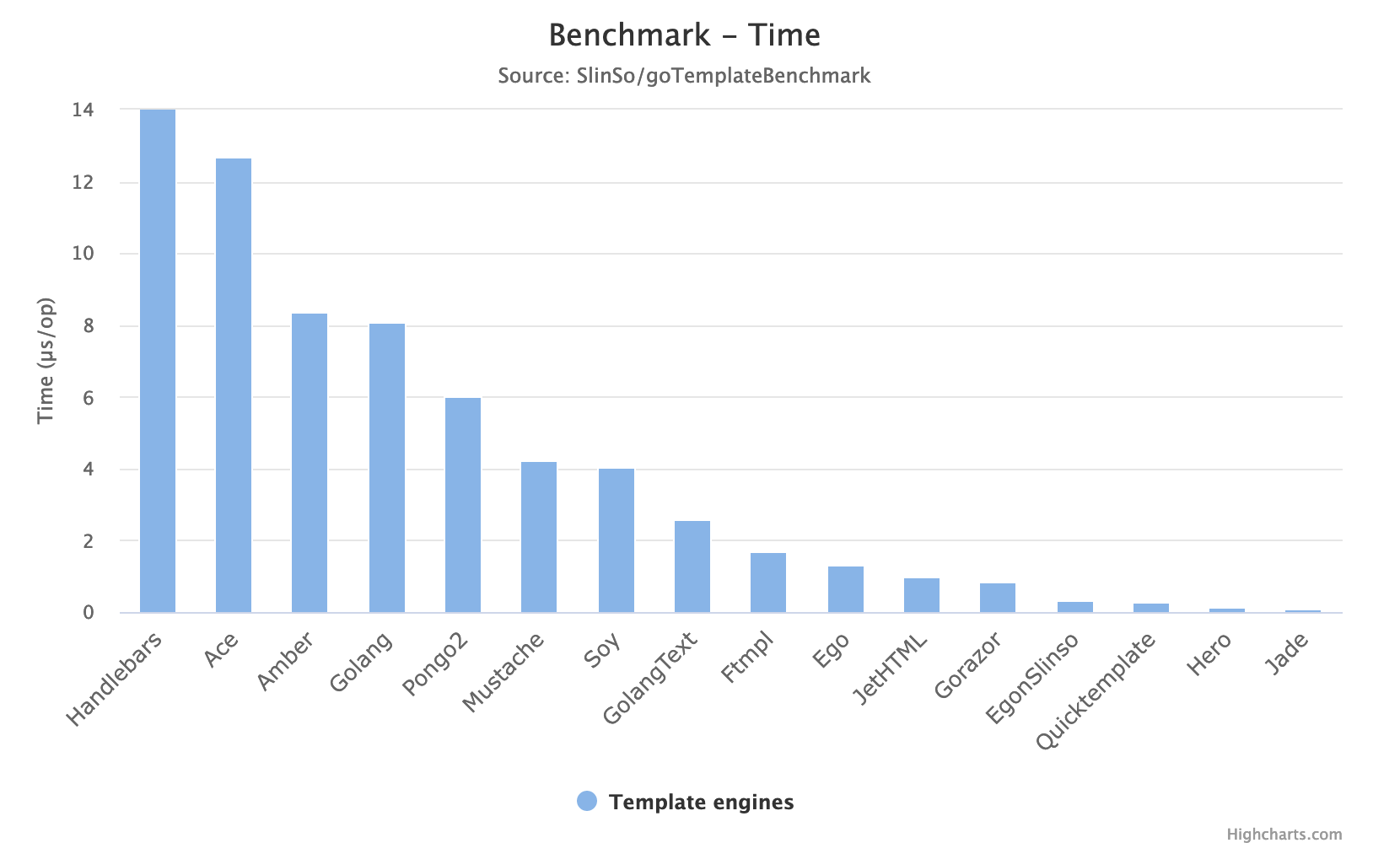

+```
+
+## Performance
+The chart data comes from [SlinSo/goTemplateBenchmark](https://github.com/SlinSo/goTemplateBenchmark).
+
+
+## Custom filter :go
+This filter is used as a helper for the command-line tool
+(to set imports, the function name and parameters).
+The filter may be placed at any nesting level.
+When Jade is used as a library, the :go filter is not needed.
+
+### Nested filter :func
+```
+:go:func
+    CustomNameForTemplateFunc(any []int, input string, args map[string]int)
+
+:go:func(name)
+    OnlyCustomNameForTemplateFunc
+
+:go:func(args)
+    (only string, input float32, args uint)
+```
+
+### Nested filter :import
+```
+:go:import
+    "github.com/Joker/jade"
+    github.com/Joker/hpp
+```
+
+#### note
+[^1]:
+    `Usage: ./jade [OPTION]... [FILE]...`
+    ```
+    -basedir string
+            base directory for templates (default "./")
+    -d string
+            directory for generated .go files (default "./")
+    -fmt
+            HTML pretty print output for generated functions
+    -inline
+            inline HTML in generated functions
+    -pkg string
+            package name for generated files (default "jade")
+    -stdbuf
+            use bytes.Buffer [default bytebufferpool.ByteBuffer]
+    -stdlib
+            use stdlib functions
+    -writer
+            use io.Writer for output
+    ```
+[^2]:
+    Runtime `html/template` generation doesn't support the following features;
+    `=>` shows the template it generates instead:
+    ```
+    for                =>  "{{/* %s, %s */}}{{ range %s }}"
+    for if             =>  "{{ if gt len %s 0 }}{{/* %s, %s */}}{{ range %s }}"
+
+    multiline code     =>  "{{/* %s */}}"
+    inheritance block  =>  "{{/* block */}}"
+
+    case statement     =>  "{{/* switch %s */}}"
+    when               =>  "{{/* case %s: */}}"
+    default            =>  "{{/* default: */}}"
+    ```
+    You can change this behaviour in the [`config.go`](https://github.com/Joker/jade/blob/master/config.go#L24) file.
+    This problem can be partly solved with [custom](https://pkg.go.dev/text/template#example-Template-Func) functions.
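Footnote [^2] says custom functions can partly make up for constructs that the runtime `html/template` path reduces to comments. The following is a minimal sketch, not taken from Jade.go itself: it assumes a `case`/`when` block is replaced by a Go function that is registered through the standard `template.FuncMap`. The function name `statusLabel`, the `Status` field and the template string are hypothetical. The template is hand-written here rather than produced by `jade.Parse`.

```go
package main

import (
	"fmt"
	"html/template"
	"strings"
)

// statusLabel is a hypothetical helper that does the work a Jade
// case/when block would do. The runtime html/template path only emits
// comments for case/when, so the branching moves into Go code.
func statusLabel(code int) string {
	switch code {
	case 0:
		return "draft"
	case 1:
		return "published"
	default:
		return "unknown"
	}
}

// pageTpl stands in for a template returned by jade.Parse; the source
// string is hand-written for illustration. Funcs must be called before
// Parse so the parser knows the name "statusLabel".
var pageTpl = template.Must(template.New("page").
	Funcs(template.FuncMap{"statusLabel": statusLabel}).
	Parse(`<p>Status: {{ statusLabel .Status }}</p>`))

// render executes pageTpl for the given status code and returns the HTML.
func render(status int) (string, error) {
	var sb strings.Builder
	err := pageTpl.Execute(&sb, struct{ Status int }{status})
	return sb.String(), err
}

func main() {
	out, err := render(1)
	if err != nil {
		panic(err)
	}
	fmt.Println(out) // <p>Status: published</p>
}
```

Registering the function before `Parse` matters. Without it, parsing fails because `statusLabel` is not a defined function. This approach only covers cases where a value can be computed. Features such as `for k, v` variable binding still depend on the placeholders configured in `config.go`.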
diff --git a/vendor/github.com/Joker/jade/config.go b/vendor/github.com/Joker/jade/config.go
new file mode 100644
index 0000000000..5e63083ae3
--- /dev/null
+++ b/vendor/github.com/Joker/jade/config.go
@@ -0,0 +1,372 @@
+package jade
+
+import "io/ioutil"
+
+//go:generate stringer -type=itemType,NodeType -trimprefix=item -output=config_string.go
+
+var TabSize = 4
+var ReadFunc = ioutil.ReadFile
+
+var (
+	golang_mode  = false
+	tag__bgn     = "<%s%s>"
+	tag__end     = ""
+	tag__void    = "<%s%s/>"
+	tag__arg_esc = ` %s="{{ print %s }}"`
+	tag__arg_une = ` %s="{{ print %s }}"`
+	tag__arg_str = ` %s="%s"`
+	tag__arg_add = `%s " " %s`
+	tag__arg_bgn = ""
+	tag__arg_end = ""
+
+	cond__if     = "{{ if %s }}"
+	cond__unless = "{{ if not %s }}"
+	cond__case   = "{{/* switch %s */}}"
+	cond__while  = "{{ range %s }}"
+	cond__for    = "{{/* %s, %s */}}{{ range %s }}"
+	cond__end    = "{{ end }}"
+
+	cond__for_if   = "{{ if gt len %s 0 }}{{/* %s, %s */}}{{ range %s }}"
+	code__for_else = "{{ end }}{{ else }}"
+
+	code__longcode  = "{{/* %s */}}"
+	code__buffered  = "{{ %s }}"
+	code__unescaped = "{{ %s }}"
+	code__else      = "{{ else }}"
+	code__else_if   = "{{ else if %s }}"
+	code__case_when = "{{/* case %s: */}}"
+	code__case_def  = "{{/* default: */}}"
+	code__mix_block = "{{/* block */}}"
+
+	text__str     = "%s"
+	text__comment = ""
+
+	mixin__bgn           = "\n%s"
+	mixin__end           = ""
+	mixin__var_bgn       = ""
+	mixin__var           = "{{ $%s := %s }}"
+	mixin__var_rest      = "{{ $%s := %#v }}"
+	mixin__var_end       = "\n"
+	mixin__var_block_bgn = ""
+	mixin__var_block     = ""
+	mixin__var_block_end = ""
+)
+
+type ReplaseTokens struct {
+	GolangMode bool
+	TagBgn     string
+	TagEnd     string
+	TagVoid    string
+	TagArgEsc  string
+	TagArgUne  string
+	TagArgStr  string
+	TagArgAdd  string
+	TagArgBgn  string
+	TagArgEnd  string
+
+	CondIf     string
+	CondUnless string
+	CondCase   string
+	CondWhile  string
+	CondFor    string
+	CondEnd    string
+	CondForIf  string
+
+	CodeForElse   string
+	CodeLongcode  string
+	CodeBuffered  string
+	CodeUnescaped string
+	CodeElse      string
+	CodeElseIf    string
+	CodeCaseWhen  string
+	CodeCaseDef   string
+	CodeMixBlock  string
+
+	TextStr     string
+	TextComment string
+
+	MixinBgn         string
+	MixinEnd         string
+	MixinVarBgn      string
+	MixinVar         string
+	MixinVarRest     string
+	MixinVarEnd      string
+	MixinVarBlockBgn string
+	MixinVarBlock    string
+	MixinVarBlockEnd string
+}
+
+func Config(c ReplaseTokens) {
+	golang_mode = c.GolangMode
+	if c.TagBgn != "" {
+		tag__bgn = c.TagBgn
+	}
+	if c.TagEnd != "" {
+		tag__end = c.TagEnd
+	}
+	if c.TagVoid != "" {
+		tag__void = c.TagVoid
+	}
+	if c.TagArgEsc != "" {
+		tag__arg_esc = c.TagArgEsc
+	}
+	if c.TagArgUne != "" {
+		tag__arg_une = c.TagArgUne
+	}
+	if c.TagArgStr != "" {
+		tag__arg_str = c.TagArgStr
+	}
+	if c.TagArgAdd != "" {
+		tag__arg_add = c.TagArgAdd
+	}
+	if c.TagArgBgn != "" {
+		tag__arg_bgn = c.TagArgBgn
+	}
+	if c.TagArgEnd != "" {
+		tag__arg_end = c.TagArgEnd
+	}
+	if c.CondIf != "" {
+		cond__if = c.CondIf
+	}
+	if c.CondUnless != "" {
+		cond__unless = c.CondUnless
+	}
+	if c.CondCase != "" {
+		cond__case = c.CondCase
+	}
+	if c.CondWhile != "" {
+		cond__while = c.CondWhile
+	}
+	if c.CondFor != "" {
+		cond__for = c.CondFor
+	}
+	if c.CondEnd != "" {
+		cond__end = c.CondEnd
+	}
+	if c.CondForIf != "" {
+		cond__for_if = c.CondForIf
+	}
+	if c.CodeForElse != "" {
+		code__for_else = c.CodeForElse
+	}
+	if c.CodeLongcode != "" {
+		code__longcode = c.CodeLongcode
+	}
+	if c.CodeBuffered != "" {
+		code__buffered = c.CodeBuffered
+	}
+	if c.CodeUnescaped != "" {
+		code__unescaped = c.CodeUnescaped
+	}
+	if c.CodeElse != "" {
+		code__else = c.CodeElse
+	}
+	if c.CodeElseIf != "" {
+		code__else_if = c.CodeElseIf
+	}
+	if c.CodeCaseWhen != "" {
+		code__case_when = c.CodeCaseWhen
+	}
+	if c.CodeCaseDef != "" {
+		code__case_def = c.CodeCaseDef
+	}
+	if c.CodeMixBlock != "" {
+		code__mix_block = c.CodeMixBlock
+	}
+	if c.TextStr != "" {
+		text__str = c.TextStr
+	}
+	if c.TextComment != "" {
+		text__comment = c.TextComment
+	}
+	if c.MixinBgn != "" {
+		mixin__bgn = c.MixinBgn
+	}
+	if c.MixinEnd != "" {
+		mixin__end = c.MixinEnd
+	}
+	if c.MixinVarBgn != "" {
+		mixin__var_bgn = c.MixinVarBgn
+	}
+	if c.MixinVar != "" {
+		mixin__var = c.MixinVar
+	}
+	if c.MixinVarRest != "" {
+		mixin__var_rest = c.MixinVarRest
+	}
+	if c.MixinVarEnd != "" {
+		mixin__var_end = c.MixinVarEnd
+	}
+	if c.MixinVarBlockBgn != "" {
+		mixin__var_block_bgn = c.MixinVarBlockBgn
+	}
+	if c.MixinVarBlock != "" {
+		mixin__var_block = c.MixinVarBlock
+	}
+	if c.MixinVarBlockEnd != "" {
+		mixin__var_block_end = c.MixinVarBlockEnd
+	}
+}
+
+//
+
+type goFilter struct {
+	Name, Args, Import string
+}
+
+var goFlt goFilter
+
+// global variable access (goFlt)
+func UseGoFilter() *goFilter { return &goFlt }
+
+//
+
+type itemType int8
+
+const (
+	itemError itemType = iota // error occurred; value is text of error
+	itemEOF
+
+	itemEndL
+	itemIdent
+	itemEmptyLine // empty line
+
+	itemText // plain text
+
+	itemComment // html comment
+	itemHTMLTag // html